Humans Have Limits, Machines Don’t: How AI is Supercharging Scammers

Global losses from scams already exceed $1 trillion a year and continue to grow rapidly, with reported fraud losses rising at roughly 25% year on year. Organized crime runs this industry with call centers in purpose-built scam towns, sophisticated money mule networks, and specialized scammer tools all built for scale and efficiency. Now, off-the-shelf AI tools — from large language models to advanced synthetic voice and video generators — are amplifying those advantages.

This post explains what is actually changing because of AI, why humans are no longer able to keep up, and how ScamGuardian is building capabilities that match the threat.

Social engineering, fully automated

Generative AI marks a new era in scammer capabilities, enabling attackers to truly automate complex social engineering interactions. LLMs already excel at activities once reserved for human scammers: running dynamic, convincing, context-aware conversations. These operations can now also be highly personalized, as autonomous agents can collect available data, craft narratives tailored to a victim’s profile and adapt responses in real time. In short, what once demanded a skilled human operator can be handled by AI — faster, cheaper, and often with greater effectiveness.

Goodbye natural senses: indistinguishable synthetic media

Humans are built to rely on sensory cues to establish trust and authenticate people: a familiar voice or video of a trusted person used to suffice. But synthetic audio and deepfake videos now make those signals simply unreliable. ScamGuardian’s earlier educational tests with AI voice cloning tools demonstrated that just a few seconds of recorded voice or a short video clip are enough to convincingly impersonate a familiar person. As a result, when tailored narratives are paired with realistic synthetic media, the normal sensory warning signs fail.

Multi-persona, multi-channel campaigns

Scams do not live in a single message type or platform, and AI makes it trivial to create multiple personas and stitch together text, voice and video across calls, SMS, messaging apps or email. Effective attacks use cross-channel reinforcement: a suspicious SMS might fail, but the same story reinforced by a phone call with synthetic voice or a short video wins the victim’s trust. This cross-channel approach also widens the surface that defenders must monitor: different industries and providers watch different channels, and gaps in collaboration between them let attackers shift to the weakest path.

Industrialization and cost decline

Most importantly, all of the above is now possible at an unprecedented scale. As inference costs fall and scammers’ orchestration tools mature, attackers are bound to replace human operators with AI agents. This converts previously targeted, high‑effort scams into high‑volume, low‑cost operations. The economics are shifting: a lower marginal cost per attack enables more attempts and wider, sharper targeting, which in turn gives the scam industry an even stronger incentive to innovate with AI tools rather than remain limited by human operators.
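To make the cost argument concrete, here is a back-of-the-envelope sketch. Every figure in it is a hypothetical assumption chosen for illustration, not measured data, and the function names are ours. It computes two things: how many attempts a fixed budget buys, and the break-even success rate, meaning the fraction of attempts that must succeed for an operation to cover its costs.

```python
def break_even_success_rate(cost_per_attempt_cents: int, payout_cents: int) -> float:
    """Fraction of attempts that must succeed for revenue to cover cost."""
    if payout_cents <= 0:
        raise ValueError("payout_cents must be positive")
    return cost_per_attempt_cents / payout_cents


def attempts_for_budget(budget_cents: int, cost_per_attempt_cents: int) -> int:
    """Number of whole attempts a fixed budget can fund."""
    if cost_per_attempt_cents <= 0:
        raise ValueError("cost_per_attempt_cents must be positive")
    return budget_cents // cost_per_attempt_cents


# Hypothetical figures, in cents to keep the arithmetic exact.
HUMAN_COST_CENTS = 500      # assumed cost of one human-run attempt ($5.00)
AI_COST_CENTS = 5           # assumed cost of one AI-run attempt ($0.05)
PAYOUT_CENTS = 100_000      # assumed average take per successful scam ($1,000)
BUDGET_CENTS = 1_000_000    # assumed operating budget ($10,000)

for label, cost in (("human operator", HUMAN_COST_CENTS), ("AI agent", AI_COST_CENTS)):
    attempts = attempts_for_budget(BUDGET_CENTS, cost)
    rate = break_even_success_rate(cost, PAYOUT_CENTS)
    print(f"{label}: {attempts} attempts, break-even success rate {rate:.4%}")
```

Under these assumed numbers, a hundredfold drop in cost per attempt buys a hundred times as many attempts and lowers the break-even success rate by the same factor, so even very low-yield targeting becomes worthwhile.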

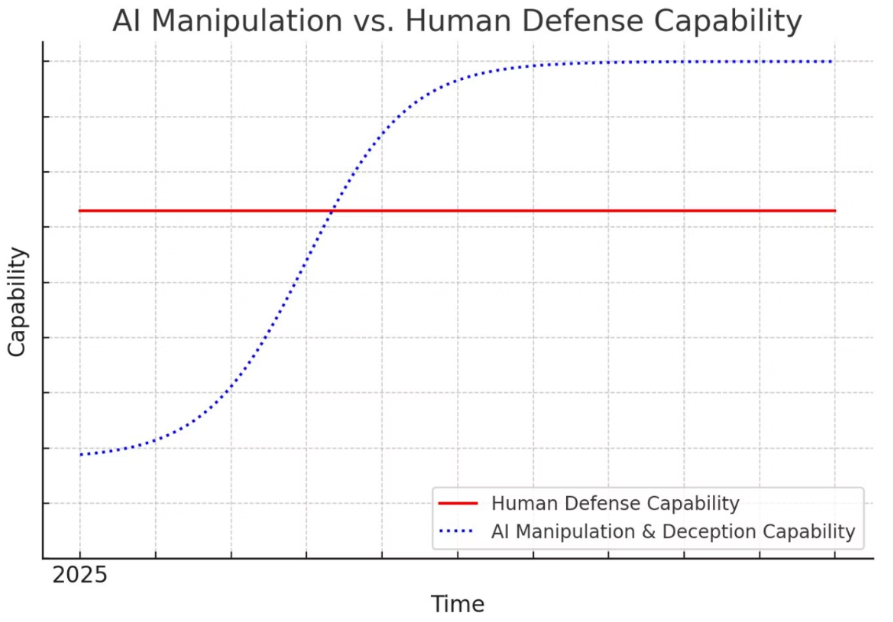

Why current defences fall short: humans are non-upgradable

Most existing defences are designed for yesterday’s adversary: static rules, single-channel detection and a heavy burden on the individual as the last line of defence. These defences will fail against dynamic, multi-modal AI-driven campaigns. Crucially, people are the only non-upgradable part of the scam offense–defense stack: machine capabilities keep accelerating while human perception and decision-making do not. The consequence is a widening gap between attacker capability and defender resilience.
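The weakness of single-channel detection can be illustrated with a toy example. The sketch below is not a description of ScamGuardian's systems or of any real product: the `Signal` type, the scores, the threshold and the time window are all hypothetical, and combining scores with a noisy-OR rule (which treats channels as independent) is just one simple assumption among many possible choices. It shows how three signals that each stay below an alert threshold on their own channel can still add up to a strong warning once they are correlated per target.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class Signal:
    target_id: str    # hypothetical identifier of the person being contacted
    channel: str      # e.g. "sms", "voice", "video"
    timestamp: float  # seconds since some reference point
    score: float      # suspicion score in [0, 1] from a per-channel detector


def single_channel_alerts(signals, threshold):
    """Targets flagged when each signal is judged in isolation."""
    return {s.target_id for s in signals if s.score >= threshold}


def combined_score(scores):
    """Noisy-OR: probability that at least one signal is malicious,
    under the simplifying assumption that signals are independent."""
    remaining = 1.0
    for score in scores:
        remaining *= 1.0 - score
    return 1.0 - remaining


def cross_channel_alerts(signals, threshold, window_seconds):
    """Targets flagged when signals on different channels within one
    time window are combined into a single score."""
    by_target = defaultdict(list)
    for s in signals:
        by_target[s.target_id].append(s)

    flagged = set()
    for target_id, events in by_target.items():
        events.sort(key=lambda s: s.timestamp)
        for start in events:
            window_end = start.timestamp + window_seconds
            best = {}  # highest score seen per channel inside the window
            for s in events:
                if start.timestamp <= s.timestamp <= window_end:
                    best[s.channel] = max(best.get(s.channel, 0.0), s.score)
            if combined_score(best.values()) >= threshold:
                flagged.add(target_id)
                break
    return flagged


# Hypothetical data: victim-1 is contacted on three channels within an hour,
# victim-2 receives two unrelated messages more than a day apart.
SIGNALS = [
    Signal("victim-1", "sms", 0.0, 0.5),
    Signal("victim-1", "voice", 600.0, 0.6),
    Signal("victim-1", "video", 1200.0, 0.5),
    Signal("victim-2", "sms", 0.0, 0.5),
    Signal("victim-2", "email", 100_000.0, 0.5),
]

print(single_channel_alerts(SIGNALS, threshold=0.8))
print(cross_channel_alerts(SIGNALS, threshold=0.8, window_seconds=3600.0))
```

With these made-up inputs, no individual signal crosses the threshold, yet the correlated view flags the target who was approached over SMS, voice and video in quick succession. In practice such correlation depends on the relevant providers sharing signals, which is exactly the collaboration gap described above.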

Fighting fire with fire: AI scams require AI defence

This is why we at ScamGuardian.ai have taken on the mission of fixing this massive offense-defense imbalance by developing capabilities that match the threat. We are deploying AI to fight AI, building large-scale deception and intelligence capture systems that help organizations, from telecom operators and payment providers to law enforcement and social media platforms, disrupt scammers across all channels.

Fighting scams is a shared task — no single solution can work in isolation. Get in touch to see how we can work together.